Year of Scam, Facebook takes measures

A few weeks ago we previewed some of the things we're working on to address the issue of fake news and hoaxes. We're committed to doing our part and today we'd like to share some updates we're testing and starting to roll out.

We believe in giving people a voice and that we cannot become arbiters of truth ourselves, so we're approaching this problem carefully. We've focused our efforts on the worst of the worst, on the clear hoaxes spread by spammers for their own gain, and on engaging both our community and third-party organizations.

The work falls into the following four areas. These are just some of the first steps we're taking to improve the experience for people on Facebook. We'll learn from these tests, and iterate and extend them over time.

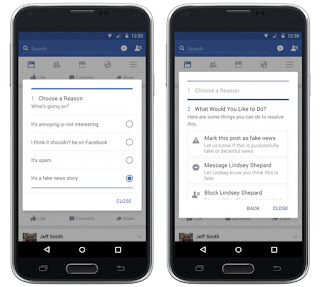

Easier Reporting

We're testing several ways to make it easier to report a hoax if you see one on Facebook, which you can do by clicking the upper right-hand corner of a post. We've relied heavily on our community for help on this issue, and easier reporting can help us detect more fake news.

Flagging Stories as Disputed

We believe providing more context can help people decide for themselves what to trust and what to share. We've started a program to work with third-party fact-checking organizations that are signatories of Poynter's International Fact Checking Code of Principles. We'll use the reports from our community, along with other signals, to send stories to these organizations. If the fact-checking organizations identify a story as fake, it will get flagged as disputed and there will be a link to the corresponding article explaining why. Stories that have been disputed may also appear lower in News Feed.

It will still be possible to share these stories, but you will see a warning that the story has been disputed as you share.

Once a story is flagged, it can't be made into an ad and promoted, either.

Informed Sharing

We're always looking to improve News Feed by listening to what the community is telling us. We've found that if reading an article makes people significantly less likely to share it, that may be a sign that a story has misled people in some way. We're going to test incorporating this signal into ranking, specifically for articles that are outliers, where people who read the article are significantly less likely to share it.
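As a rough illustration of the signal described above, the Python sketch below compares how often people share an article after reading it with how often they share it without reading it, and flags articles where readers share far less. This is only a guess at the general idea, not Facebook's implementation: the `ArticleStats` fields, the `share_ratio` and `find_outliers` helpers, and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ArticleStats:
    """Hypothetical per-article counts; field names are assumptions."""
    title: str
    readers: int            # people who opened the article
    reader_shares: int      # shares by people who opened it
    non_readers: int        # people who saw the post without opening it
    non_reader_shares: int  # shares by people who did not open it


def share_ratio(stats: ArticleStats) -> Optional[float]:
    """Share rate of readers divided by share rate of non-readers.

    Returns None when there is not enough data to form the ratio.
    """
    if stats.readers == 0 or stats.non_readers == 0 or stats.non_reader_shares == 0:
        return None
    reader_rate = stats.reader_shares / stats.readers
    non_reader_rate = stats.non_reader_shares / stats.non_readers
    return reader_rate / non_reader_rate


def find_outliers(articles: List[ArticleStats], threshold: float = 0.5) -> List[str]:
    """Titles of articles whose readers share much less than non-readers.

    The threshold is an assumed cutoff chosen only for this example.
    """
    flagged = []
    for article in articles:
        ratio = share_ratio(article)
        if ratio is not None and ratio < threshold:
            flagged.append(article.title)
    return flagged


articles = [
    ArticleStats("Ordinary story", 1000, 100, 1000, 100),
    ArticleStats("Possibly misleading story", 1000, 10, 1000, 100),
]
outliers = find_outliers(articles)
print(outliers)
```

In this toy data the second article is shared ten times less often by people who actually read it, so it is the only one flagged. A real ranking system would presumably weigh such a signal alongside many others rather than act on it alone.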

Disrupting Financial Incentives for Spammers

We've found that a lot of fake news is financially motivated. Spammers make money by masquerading as well-known news organizations and posting hoaxes that get people to visit their sites, which are often mostly ads. So we're doing several things to reduce the financial incentives. On the buying side, we've eliminated the ability to spoof domains, which will reduce the prevalence of sites that pretend to be real publications. On the publisher side, we are analyzing publisher sites to detect where policy enforcement actions might be necessary.

It's important to us that the stories you see on Facebook are authentic and meaningful. We're excited about this progress, but we know there's more to be done. We're going to keep working on this problem for as long as it takes to get it right.
